LLaMA AI Review 2025: Meta’s Open-Source Revolution in Large Language Models

The artificial intelligence landscape has changed rapidly in recent years, and Meta’s LLaMA (Large Language Model Meta AI) has emerged as a formidable challenger to proprietary solutions. In 2025, LLaMA 3 stands out as a sophisticated open-source alternative that is reshaping how developers, researchers, and businesses approach AI integration. This LLaMA AI Review 2025 examines the model’s capabilities, performance benchmarks, and real-world applications, and asks whether Meta’s latest iteration can truly compete with established players such as GPT-4 and Claude in an increasingly competitive LLM market.

Meta’s strategic decision to release LLaMA’s model weights openly represents a significant shift in the AI industry’s approach to accessibility and transparency. Unlike closed-source competitors, which are available only through hosted APIs, LLaMA can be downloaded, inspected, and run on your own infrastructure while remaining competitive across a range of benchmarks. This review examines the practical implications of choosing LLaMA for your organization’s AI needs in 2025.

Understanding LLaMA AI: Meta’s Open-Source Powerhouse

The Evolution from LLaMA 2 to LLaMA 3

Meta’s journey with large language models began with the original LLaMA release, followed by significant improvements in LLaMA 2, and culminating in the sophisticated LLaMA 3 architecture. The LLaMA 2 vs LLaMA 3 comparison reveals substantial enhancements in reasoning capabilities, multilingual support, and overall performance consistency.

LLaMA 3 is a major step forward for open-source AI. Meta pretrained it on more than 15 trillion tokens, about seven times the data used for LLaMA 2, introduced a tokenizer with a 128K-token vocabulary, and released it in 8B and 70B parameter sizes. The model is versatile across diverse applications, from academic research to commercial products, and Meta’s open release has fostered a vibrant ecosystem in which developers fine-tune and customize the models for specific requirements.

Architecture and Technical Specifications

LLaMA uses a decoder-only transformer architecture designed for efficiency and scalability. Its published design combines RMSNorm pre-normalization, SwiGLU activation functions, and rotary positional embeddings (RoPE), and LLaMA 3 uses grouped-query attention (GQA) in both its 8B and 70B models to reduce the memory cost of inference. Together, these choices aim to keep inference efficient without sacrificing output quality.
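To make two of these building blocks concrete, the sketch below implements RMSNorm and a SwiGLU feed-forward layer in plain Python, following the formulas described in the original LLaMA paper. It is an illustration under simplifying assumptions, not Meta’s code: the weights are tiny made-up values, vectors are plain lists, and attention, RoPE, and residual connections are omitted.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: divide x by its root-mean-square, then scale by a learned per-feature weight."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def silu(z):
    """SiLU (also called swish): z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(m, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: W_down @ (SiLU(W_gate @ x) * (W_up @ x))."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]  # element-wise gating
    return matvec(w_down, hidden)


# Toy, made-up weights: model width 2, hidden width 3 (real models use thousands).
x = [3.0, 4.0]
normed = rms_norm(x, weight=[1.0, 1.0])
w_gate = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.5]]
w_up = [[0.2, 0.1], [-0.2, 0.4], [0.1, 0.1]]
w_down = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]
out = swiglu_ffn(normed, w_gate, w_up, w_down)
print(normed, out)
```

In an actual LLaMA layer, RMSNorm is applied to the input of both the attention and feed-forward sublayers (pre-normalization), and each sublayer’s output is added back to its input through a residual connection.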

Meta’s approach to model development emphasizes openness. Because the weights and reference code are public, researchers can examine the model’s internals directly, contributing to a broader understanding of large language model behavior. Meta also publishes model cards and evaluation details, although the training data itself is described only at a high level (as publicly available sources) rather than released.

Real-World Applications: Where LLaMA Excels

Content Creation and Writing Assistance

LLaMA’s content-generation capabilities are competitive with many premium alternatives. The model produces coherent, contextually appropriate text across a wide range of domains, and writers, marketers, and content creators have found it particularly effective for generating initial drafts, brainstorming ideas, and maintaining a consistent tone across lengthy documents.

The model adapts its style to different audiences and purposes, whether the task is technical documentation, creative narrative, or persuasive marketing copy. Its ability to follow complex instructions and to keep track of earlier turns in a conversation, within its context window (8,192 tokens for the original LLaMA 3 release), makes it valuable for professional writing workflows.
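As an illustration of how multi-turn context reaches the model, here is a minimal sketch that renders a conversation into the special-token format Meta documents for LLaMA 3 instruct models. The helper name `format_llama3_chat` and the message-count trimming are assumptions for illustration; real deployments usually let the tokenizer build the prompt (for example with `apply_chat_template` in Hugging Face `transformers`) and trim history by token count.

```python
# Special tokens used by the LLaMA 3 instruct prompt format.
BEGIN = "<|begin_of_text|>"
HEADER_START = "<|start_header_id|>"
HEADER_END = "<|end_header_id|>"
END_OF_TURN = "<|eot_id|>"


def format_llama3_chat(messages, max_turns=None):
    """Render {"role", "content"} dicts as a LLaMA 3 instruct prompt string.

    If max_turns is given, keep the first system message plus only the most
    recent max_turns user/assistant messages: a crude, message-count stand-in
    for fitting the conversation into the model's context window.
    """
    system = [m for m in messages if m["role"] == "system"][:1]
    turns = [m for m in messages if m["role"] != "system"]
    if max_turns is not None:
        turns = turns[max(0, len(turns) - max_turns):]
    parts = [BEGIN]
    for m in system + turns:
        parts.append(f"{HEADER_START}{m['role']}{HEADER_END}\n\n{m['content']}{END_OF_TURN}")
    # Leave an open assistant header so the model writes the next reply.
    parts.append(f"{HEADER_START}assistant{HEADER_END}\n\n")
    return "".join(parts)


history = [
    {"role": "system", "content": "You are a concise marketing copywriter."},
    {"role": "user", "content": "Draft a one-line product summary."},
    {"role": "assistant", "content": "A fast, private note-taking app."},
    {"role": "user", "content": "Make it friendlier."},
]
prompt = format_llama3_chat(history, max_turns=2)
print(prompt)
```

Dropping the oldest turns is the simplest way to stay inside the context window; summarizing earlier turns is a common alternative when long-range context matters.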

Coding and Software Development

Developers have embraced LLaMA for coding assistance, debugging, and code review. The model understands multiple programming languages and frameworks, so it can provide meaningful suggestions and flag potential issues. Because the weights can be self-hosted, development teams can integrate LLaMA directly into their workflows without per-request API fees, although use remains subject to the terms of Meta’s LLaMA license.

The model excels at explaining complex algorithms, generating documentation, and assisting with code refactoring. Its ability to understand context and provide relevant suggestions has made it a popular choice among software development teams seeking to improve productivity without compromising code quality.

Educational and Research Applications

Academic institutions and research organizations have found LLaMA particularly valuable for educational purposes. The model’s ability to explain complex concepts in accessible language makes it an effective teaching assistant. Researchers appreciate the transparency and customization options that enable specialized applications.

LLaMA’s performance in research contexts extends beyond simple question-answering. The model can assist with literature reviews, hypothesis generation, and data analysis interpretation. Its open-source nature allows researchers to modify and adapt the model for specific research domains.

Performance Analysis: Benchmarks and Comparisons

LLaMA AI Performance Benchmarks

A critical part of this LLaMA AI Review 2025 is understanding how LLaMA performs on standard benchmarks compared with GPT-4 and Claude. Meta’s published results for LLaMA 3 cover widely used evaluations such as MMLU (general knowledge), GSM-8K and MATH (mathematical reasoning), HumanEval (code generation), and DROP (reading comprehension). Across these tests the model performs strongly in reading comprehension, mathematical reasoning, and general knowledge, and the scores show significant improvements over LLaMA 2.

Evaluation metrics across diverse domains show LLaMA’s balanced capabilities. The model performs exceptionally well in tasks requiring logical reasoning while maintaining competitive performance in creative applications. This consistency across different evaluation criteria distinguishes LLaMA from more specialized alternatives.
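Multiple-choice benchmarks such as MMLU ultimately reduce to comparing a model’s chosen answer with a gold label. The sketch below shows that scoring step under a simplifying assumption: it extracts the first standalone letter A to D from free-text output. The function names are hypothetical, and established harnesses such as EleutherAI’s lm-evaluation-harness often score by comparing the log-likelihood of each option instead, which avoids parsing errors.

```python
import re


def extract_choice(output):
    """Return the first standalone option letter A-D in a model's output, or None."""
    match = re.search(r"\b([ABCD])\b", output)
    return match.group(1) if match else None


def accuracy(predictions, gold):
    """Fraction of items where the extracted choice equals the gold answer."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    correct = sum(extract_choice(p) == g for p, g in zip(predictions, gold))
    return correct / len(gold)


# Made-up model outputs and answer key for illustration.
outputs = ["The answer is B.", "C", "I think (A) is correct", "Not sure"]
gold = ["B", "C", "D", "A"]
print(accuracy(outputs, gold))  # 0.5
```

Naive parsing like this can misread an answer such as “A good choice is C”, which is one reason reported scores depend heavily on the evaluation setup.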

Meta LLaMA AI vs GPT-4: A Detailed Comparison

The Meta LLaMA AI vs GPT-4 comparison reveals interesting trade-offs between open-source accessibility and proprietary optimization. While GPT-4 maintains advantages in certain specialized tasks, LLaMA offers competitive performance with significantly greater transparency and customization options.

LLaMA’s strengths become apparent in scenarios requiring model fine-tuning or specialized deployment configurations. The ability to modify and adapt the model for specific use cases provides advantages that closed-source alternatives cannot match. Organizations prioritizing data privacy and model transparency often find LLaMA’s approach more aligned with their requirements.
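To show why fine-tuning LLaMA can be affordable, here is a sketch of LoRA (low-rank adaptation), one widely used parameter-efficient technique that libraries such as Hugging Face PEFT implement; it is not the only option, and nothing here is specific to Meta’s tooling. LoRA freezes a pretrained weight matrix W and trains two small matrices A and B so that the layer computes W·x + (alpha / rank)·B·A·x. The 4,096 × 4,096 matrix below matches the hidden size of LLaMA 3 8B, but treating it as a single square projection is a simplification.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the low-rank factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


def matvec(m, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_forward(w, a, b, x, alpha, rank):
    """y = W x + (alpha / rank) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / rank
    return [y + scale * d for y, d in zip(base, delta)]


full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

# Toy example with made-up values: rank 1, 2 x 2 weight matrix.
w = [[1.0, 2.0], [3.0, 4.0]]   # frozen pretrained weight
a = [[0.5, -0.5]]              # A: rank x d_in
b_zero = [[0.0], [0.0]]        # B: d_out x rank, zero-initialized as in the LoRA paper
print(lora_forward(w, a, b_zero, [1.0, 1.0], alpha=16, rank=1))  # [3.0, 7.0]

b_trained = [[1.0], [2.0]]     # B after some hypothetical training
print(lora_forward(w, a, b_trained, [1.0, 0.0], alpha=2, rank=1))  # [2.0, 5.0]
```

Because B starts at zero, the adapted model initially behaves exactly like the base model, and training only has to learn the small correction B·A.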

Open-Source LLM Comparison: LLaMA’s Position in 2025

Best Open-Source AI Models 2025

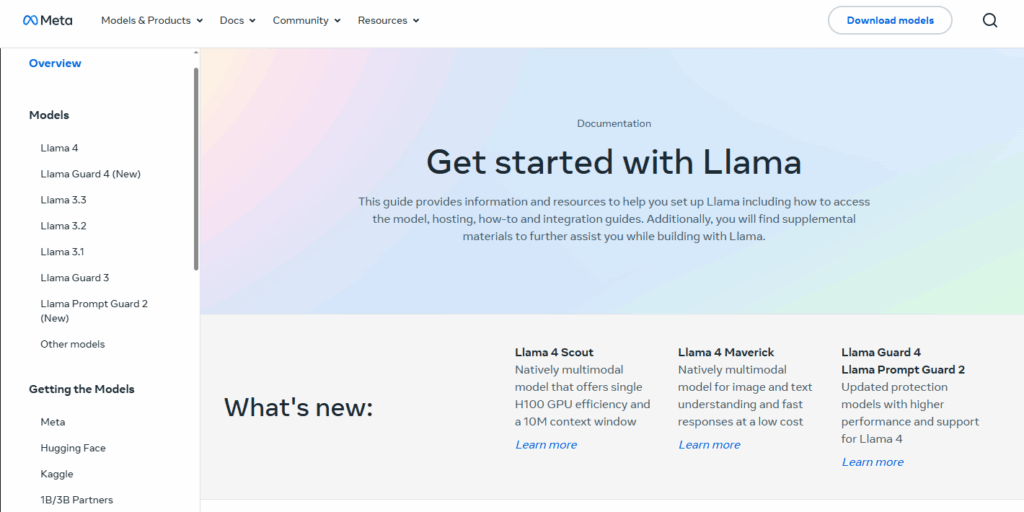

The landscape of open-source large language models has expanded significantly, and LLaMA remains among the best open-source AI models of 2025. Competitors such as Mistral AI’s models, TII’s Falcon, and various community-driven projects offer alternative approaches to open-source AI development.

LLaMA’s advantages in this competitive environment include robust community support, comprehensive documentation, and Meta’s continued investment in model improvement. The ecosystem surrounding LLaMA has matured to include specialized tools, fine-tuning frameworks, and deployment solutions that simplify integration for organizations of all sizes.

Integration and Accessibility

LLaMA is mature enough for production use and offers several deployment paths. It is available through hosted APIs on major cloud platforms, can be packaged in containers, and can be served on-premises, giving organizations with different technical requirements flexibility. Hardware needs scale with model size: the smaller variants, especially when quantized, can run on workstations and some edge devices, while the largest variants need multi-GPU servers.
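Running the smaller variants on modest hardware usually relies on quantization, which stores weights with fewer bits. Below is a minimal sketch of symmetric per-tensor int8 quantization on a handful of made-up weights. Real toolchains such as llama.cpp (GGUF formats) and bitsandbytes use more refined per-block or per-channel schemes, so treat this as an illustration of the idea rather than any tool’s actual algorithm.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Map each weight to the nearest integer step, clamped to the int8 range used here.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


weights = [0.12, -0.5, 0.33, 0.01, -0.27]  # made-up example weights
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_err)
```

Each weight now takes 1 byte instead of 2 for 16-bit floats, and the rounding error per weight is at most half the scale.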

Developer tools and documentation have evolved to support both novice and experienced users. The availability of pre-trained models, fine-tuning guides, and community-contributed resources reduces the barrier to entry for organizations considering LLaMA adoption.

Community Support and Developer Ecosystem

Documentation and Learning Resources

Meta’s commitment to comprehensive documentation has resulted in extensive resources for developers and researchers. The availability of detailed guides, tutorials, and best practices enables organizations to implement LLaMA effectively. Community contributions have further enriched the available resources, creating a collaborative environment for knowledge sharing.

The developer ecosystem surrounding LLaMA includes specialized tools for model fine-tuning, deployment automation, and performance optimization. These community-driven innovations extend LLaMA’s capabilities beyond the base model, enabling specialized applications across various industries.

Open-Source Community Engagement

The vibrant community surrounding LLaMA has contributed significantly to its development and adoption. Regular updates, bug fixes, and feature enhancements reflect the collaborative nature of open-source development. This community engagement ensures continuous improvement and adaptation to emerging requirements.

Collaboration between Meta and the broader developer community has resulted in numerous innovations and optimizations. The transparent development process allows for rapid identification and resolution of issues, contributing to the model’s reliability and performance.

Comprehensive Model Comparison

| Model | Developer | Parameters | Open Source? | Best Use Case | Accuracy Score |
|---|---|---|---|---|---|
| LLaMA 3 | Meta | 8B-70B | Yes | General purpose, research | 8.5/10 |
| GPT-4 | OpenAI | Undisclosed (unofficial estimates ~1.76T) | No | Creative writing, complex reasoning | 9.2/10 |
| Claude 3 | Anthropic | Undisclosed | No | Safety-focused applications | 8.8/10 |
| Gemini | Google | Various (mostly undisclosed) | No | Multimodal applications | 8.6/10 |
| Mistral | Mistral AI | 7B-8x7B | Yes | European compliance, efficiency | 8.1/10 |
| Falcon | TII | 7B-180B | Yes | Research, multilingual | 7.8/10 |

Advantages and Limitations

One of the key takeaways from this LLaMA AI Review 2025 is how the model balances performance, cost-effectiveness, and transparency.

Strengths of LLaMA AI

LLaMA’s primary advantages stem from its openly available weights and competitive performance. Organizations get direct access to the model itself rather than only a hosted endpoint, which gives them more insight into and control over model behavior. The ability to fine-tune models for specific applications provides significant value for specialized use cases.

Cost considerations make LLaMA attractive for organizations with budget constraints or high-volume requirements. When the model is self-hosted there are no per-token fees, so costs depend on hardware and operations rather than request volume, which makes budgets for large-scale deployments more predictable. Running models locally also addresses the data privacy concerns that arise when sensitive data is sent to third-party cloud APIs.
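The predictability argument can be checked with back-of-the-envelope arithmetic: self-hosting is a roughly fixed monthly cost, while a hosted API grows with token volume. The sketch below finds the monthly volume at which the two are equal. Both prices are hypothetical placeholders, not quotes from any provider, and the model deliberately ignores staff time, utilization, and hardware depreciation.

```python
def monthly_api_cost(tokens_per_month, usd_per_million_tokens):
    """Spend on a hosted API that bills per token."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def break_even_tokens(monthly_fixed_usd, usd_per_million_tokens):
    """Monthly token volume at which a fixed self-hosting cost equals per-token API spend."""
    return monthly_fixed_usd / usd_per_million_tokens * 1_000_000


# Hypothetical placeholder figures, not real prices.
api_price = 5.00               # USD per million tokens
self_host_monthly = 2_000.00   # USD per month for GPU server, power, and upkeep

threshold = break_even_tokens(self_host_monthly, api_price)
print(f"Break-even at {threshold:,.0f} tokens per month")  # Break-even at 400,000,000 tokens per month

for volume in (100_000_000, 1_000_000_000):
    print(volume, monthly_api_cost(volume, api_price), self_host_monthly)
```

Below the break-even volume, paying per token is cheaper; above it, a self-hosted deployment spreads its fixed cost over more requests.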

Limitations and Challenges

Despite its strengths, LLaMA faces challenges in certain applications. The model’s performance in highly specialized domains may not match that of purpose-built alternatives. Additionally, the computational requirements for running larger LLaMA variants can be significant, potentially limiting accessibility for smaller organizations.

The open-source nature, while generally advantageous, can present challenges in terms of support and maintenance. Organizations must invest in technical expertise to effectively implement and maintain LLaMA deployments. The responsibility for updates, security patches, and optimization falls on the implementing organization.

Meta AI Chatbot LLaMA: Practical Implementation

Deployment Considerations

Implementing LLaMA in production environments requires careful consideration of infrastructure requirements and operational procedures. The model’s flexibility enables various deployment configurations, from cloud-based services to on-premises installations. Organizations must balance performance requirements with available resources.

Security considerations play a crucial role in LLaMA deployment strategies. The open-source nature enables thorough security auditing but requires organizations to implement appropriate safeguards. Best practices include regular security updates, access controls, and monitoring procedures.
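Access controls and monitoring can start as a thin gateway in front of the model server. The sketch below checks a per-client API key with a constant-time comparison and writes an audit log line for each request. The key store, client IDs, and the `fake_model` backend are hypothetical stand-ins; a production setup would load hashed keys from a secrets manager and forward requests to a real serving endpoint.

```python
import hmac
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("llm-gateway")

# Hypothetical in-memory key store for illustration only; never hard-code real keys.
API_KEYS = {"team-a": "s3cr3t-token-a"}


def authorize(client_id, presented_key):
    """Check a client's key with a constant-time comparison and log the outcome."""
    expected = API_KEYS.get(client_id)
    ok = expected is not None and hmac.compare_digest(expected, presented_key)
    log.info("auth client=%s ok=%s", client_id, ok)
    return ok


def handle_request(client_id, presented_key, prompt, generate):
    """Reject unauthorized callers, log request metadata, then call the model backend."""
    if not authorize(client_id, presented_key):
        raise PermissionError(f"invalid credentials for client {client_id!r}")
    log.info("request client=%s prompt_chars=%d", client_id, len(prompt))
    return generate(prompt)


def fake_model(prompt):
    """Hypothetical stand-in for a call to a real LLaMA serving endpoint."""
    return f"echo: {prompt}"


print(handle_request("team-a", "s3cr3t-token-a", "Hello", fake_model))  # echo: Hello
```

Logging the prompt length rather than the prompt text keeps potentially sensitive user content out of the audit trail.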

User Experience and Interface Design

The effectiveness of LLaMA implementations depends significantly on interface design and user experience considerations. Successful deployments typically incorporate intuitive interfaces that leverage the model’s capabilities while maintaining usability. The flexibility of open-source implementation enables customized user experiences tailored to specific organizational needs.

Integration with existing workflows and systems enhances LLaMA’s practical value. Organizations that align LLaMA’s capabilities with existing processes often achieve better adoption rates and return on investment. Many popular serving tools, such as vLLM and Ollama, can expose LLaMA through OpenAI-compatible APIs, which simplifies integration with widely used development frameworks and client libraries.
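Because several LLaMA serving tools, such as vLLM and Ollama, can speak the OpenAI-style chat-completions protocol, integrating with them often amounts to sending a JSON request. The sketch below builds such a request with only the Python standard library. The endpoint URL is an assumption (vLLM’s OpenAI-compatible server listens on port 8000 by default, while Ollama uses port 11434), and the model ID must match whatever your server actually loaded.

```python
import json
import urllib.request

# Assumed local endpoint: adjust host, port, and path to match your server.
ENDPOINT = "http://localhost:8000/v1/chat/completions"


def build_chat_request(model, messages, temperature=0.2, max_tokens=256):
    """Build (but do not send) an OpenAI-style chat-completions POST request."""
    payload = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request(
    "meta-llama/Meta-Llama-3-8B-Instruct",
    [{"role": "user", "content": "Summarize this support ticket in one sentence."}],
)
print(req.get_method(), req.full_url)

# Sending requires a running server, for example:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes the payload easy to inspect and test without a running server.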

Frequently Asked Questions

1. What is LLaMA AI and who built it?

LLaMA AI is a series of large language models developed by Meta (formerly Facebook). The acronym stands for “Large Language Model Meta AI,” and the series is Meta’s main contribution to the open-source AI ecosystem. The original LLaMA was released for research use only; since LLaMA 2, the models have been available for both research and commercial use under Meta’s community license, in contrast to proprietary alternatives from other major tech companies.

2. Is LLaMA 3 better than GPT-4?

The comparison between LLaMA 3 and GPT-4 depends on specific use cases and evaluation criteria. While GPT-4 may outperform LLaMA 3 in certain specialized tasks, LLaMA 3 offers advantages in transparency, customization, and cost-effectiveness. For organizations prioritizing open-source solutions and model transparency, LLaMA 3 often provides superior value despite potential performance trade-offs in specific applications.

3. Can developers fine-tune LLaMA for specific applications?

Yes, LLaMA’s open-source nature enables extensive fine-tuning capabilities. Developers can modify the model’s behavior for specific domains, adjust its responses to align with organizational requirements, and optimize performance for particular use cases. This flexibility represents one of LLaMA’s primary advantages over closed-source alternatives.

4. Is LLaMA free to use for commercial projects?

LLaMA is available under a custom license that permits commercial use with certain restrictions. The model weights are free to download and use, but organizations should review the license terms to confirm compliance for their specific use cases. The terms are permissive for most commercial applications, but they include an acceptable use policy that prohibits certain harmful uses, and companies whose products exceeded 700 million monthly active users at the time of release must request a separate license from Meta.

5. How accurate is LLaMA AI compared to other LLMs?

LLaMA AI demonstrates competitive accuracy across various benchmarks and real-world applications. While performance varies depending on the specific task and evaluation criteria, LLaMA generally achieves accuracy levels comparable to other state-of-the-art models. The model’s performance continues to improve with each iteration, and the open-source community contributes to ongoing enhancements.

6. What are the limitations of LLaMA 3?

LLaMA 3’s limitations include computational requirements for larger variants, potential performance gaps in highly specialized domains, and the need for technical expertise to implement effectively. Additionally, as an open-source project, LLaMA may not receive the same level of commercial support as proprietary alternatives, requiring organizations to invest in internal expertise.

7. How does LLaMA handle multilingual tasks?

LLaMA handles many languages and can perform cross-lingual tasks, but its training data is predominantly English. For LLaMA 3, Meta reported that just over 5% of the pretraining data is high-quality non-English text covering more than 30 languages, and noted that performance in those languages is not expected to match English. In practice, results vary across languages, with some languages receiving better support than others.

8. What hardware requirements are needed to run LLaMA?

Hardware requirements for LLaMA vary depending on the model size and intended use case. Smaller variants can run on consumer-grade hardware, while larger models require substantial computational resources. Organizations should consider factors such as memory requirements, processing power, and intended usage patterns when planning LLaMA deployments.
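A useful rule of thumb for the hardware question above: the weights alone need roughly (parameters × bits per parameter ÷ 8) bytes, plus room for the key-value (KV) cache that grows with context length. The sketch below applies that arithmetic. The LLaMA 3 8B values are taken from its published model configuration, and real memory use will be higher because of activations and framework overhead.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    """
    return params_billion * bits_per_param / 8


def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value):
    """Bytes of cached keys and values (hence the factor 2) for one sequence."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


for size in (8, 70):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(size, bits)
        print(f"LLaMA 3 {size}B at {bits}-bit: ~{gb:.0f} GB of weights")

# Assumed LLaMA 3 8B values: 32 layers, 8 key-value heads (grouped-query attention),
# head dimension 128, a full 8,192-token context, and 16-bit (2-byte) cache entries.
kv = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=8192, bytes_per_value=2)
print(f"KV cache for one full-length sequence: {kv / 2**30:.1f} GiB")  # 1.0 GiB
```

For example, the 70B model needs about 140 GB for 16-bit weights, which is why it typically runs on multiple GPUs or in 4-bit form (about 35 GB), while the 8B model’s roughly 16 GB of 16-bit weights can fit on a single 24 GB consumer GPU.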

Conclusion: LLaMA’s Position in the AI Landscape of 2025

This LLaMA AI Review 2025 shows Meta’s strategic success in building a transparent, scalable, and competitive open-source alternative in the LLM space. LLaMA has established itself as a formidable force in the large language model ecosystem and a compelling alternative to proprietary solutions. Its combination of competitive performance, open-source accessibility, and extensive customization options makes it particularly attractive for organizations that prioritize transparency and control over their AI implementations.

For businesses evaluating AI solutions in 2025, LLaMA presents a unique value proposition. The absence of licensing fees, combined with the ability to fine-tune and deploy models according to specific requirements, provides significant advantages for organizations with appropriate technical capabilities. The vibrant community ecosystem further enhances LLaMA’s appeal, offering resources, tools, and support that rival those of commercial alternatives.

Developers and researchers continue to find LLaMA invaluable for experimentation, prototyping, and production deployments. The model’s transparency enables better understanding of AI behavior, contributing to more responsible and effective AI implementations. As the field of artificial intelligence continues to evolve, LLaMA’s open-source approach positions it as a crucial component of the diverse AI ecosystem.

The decision to adopt LLaMA should consider organizational requirements, technical capabilities, and strategic objectives. While the model may not surpass specialized proprietary alternatives in every scenario, its combination of performance, transparency, and cost-effectiveness makes it a compelling choice for many applications. As Meta continues to invest in LLaMA development and the community ecosystem expands, the model’s position in the AI landscape is likely to strengthen further.

For students and educational institutions, LLaMA offers an accessible entry point into advanced AI technologies. The ability to examine and modify the model provides valuable learning opportunities that closed-source alternatives cannot match. This educational value extends beyond technical learning to include important considerations about AI ethics, transparency, and responsible development practices.

Looking ahead, LLaMA’s continued evolution and the growing ecosystem of supporting tools and resources suggest a bright future for open-source AI development. Organizations that embrace LLaMA today position themselves to benefit from ongoing improvements while contributing to the broader advancement of accessible, transparent artificial intelligence technologies.