7 Best AI Tools for Large Language Models (LLMs): A Comprehensive Guide for 2025

The artificial intelligence landscape has transformed dramatically in 2025, with large language models becoming the cornerstone of modern AI applications. As organizations and developers increasingly rely on these powerful language models, the demand for sophisticated AI tools that can effectively manage, deploy, and optimize LLMs has skyrocketed. Whether you’re building chatbots, developing content generation systems, or creating complex AI-powered applications, choosing the right AI tools for large language models can make the difference between project success and failure.

Current trends in AI language models show a shift toward more accessible, efficient, and specialized tools that cater to diverse use cases. From open source LLM tools that provide flexibility and cost-effectiveness to enterprise-grade LLM fine-tuning platforms that offer advanced customization capabilities, the ecosystem has matured significantly. This guide explores seven of the best AI tools for large language models, examining their features, capabilities, and how they can enhance your AI development workflow.

Understanding Large Language Models: The Foundation of Modern AI

Before diving into the specific tools, it’s crucial to understand what a large language model is and why LLMs have become so influential in the AI landscape. A large language model (LLM) is an artificial intelligence system trained on vast amounts of text data to understand, generate, and manipulate human language. These models, often containing billions of parameters, can perform a wide range of language tasks including text generation, translation, summarization, and question-answering.

The significance of LLMs extends beyond simple text processing: they represent a fundamental shift in how machines understand and interact with human language. Modern language models like GPT-4, Claude, and Google’s Gemini have demonstrated remarkable capabilities in reasoning, creativity, and problem-solving. The best LLMs today can engage in complex conversations, write code, analyze documents, and even perform mathematical calculations with impressive accuracy.

Concrete examples help illustrate their practical applications. These range from customer service chatbots that provide 24/7 support to content creation tools that assist writers and marketers. Other examples include virtual assistants, code completion tools, educational tutoring systems, and sophisticated translation services. As these models continue to evolve, the tools that support their development and deployment become increasingly important.

The 7 Best AI Tools for Large Language Models

1. Hugging Face Transformers: The Open-Source Powerhouse

Hugging Face has established itself as the go-to platform for anyone working with language models. Their comprehensive ecosystem includes not just a vast repository of pre-trained models but also powerful tools for fine-tuning, deployment, and collaboration. The platform hosts thousands of models, from compact local models suitable for edge devices to powerful models that rival commercial offerings.

Key Features:

- Access to over 100,000 pre-trained models including famous models like BERT, GPT, and T5

- Integrated model hub with version control and collaboration features

- Support for multiple frameworks including PyTorch, TensorFlow, and JAX

- Built-in tokenization, preprocessing, and evaluation tools

- Seamless integration with popular ML frameworks

Use Cases: Hugging Face excels in research environments, educational settings, and rapid prototyping. It’s particularly valuable for developers who need quick access to state-of-the-art models without the overhead of training from scratch. The platform supports everything from small pre-trained models for lightweight applications to large-scale transformer architectures for complex tasks.

Pros:

- Extensive model library with clear documentation

- Active community contributing to model development

- Free tier with generous usage limits

- Excellent API documentation and tutorials

- Strong support for both cloud and local deployment

Why It’s Great for LLMs: Hugging Face democratizes access to cutting-edge language models, making it possible for developers at any level to leverage state-of-the-art AI. The platform’s emphasis on open-source development and community collaboration has made it an essential tool for anyone working with LLMs.

2. Ollama: Local LLM Deployment Made Simple

Ollama has changed how developers deploy and run large language models locally. This tool addresses the growing need for privacy-conscious AI deployment and reduces dependency on cloud services. The system works through both command line and graphical interfaces, supporting macOS, Linux, and Windows. Users pull models from Ollama’s library, including general-purpose text models such as Llama 3.2, Mistral, and Phi-3, Code Llama for programming, and LLaVA for image understanding.

Key Features:

- One-command model installation and execution

- Support for popular models including Llama, Mistral, and Phi-3

- Local inference without internet dependency

- REST API for easy integration

- Multi-platform compatibility

- Automatic GPU acceleration when available

Use Cases: Ollama is ideal for developers who prioritize data privacy, need offline capabilities, or want to reduce API costs. It’s particularly valuable for building applications that handle sensitive information or require consistent performance without internet connectivity.

Pros:

- Simple installation and setup process

- No usage limits or API costs

- Complete data privacy and security

- Excellent performance on modern hardware

- Active development with regular updates

Why It’s Great for LLMs: Ollama bridges the gap between complex model deployment and practical application development. It makes running sophisticated language models as simple as running any other software, opening up new possibilities for local AI applications.
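To make the REST API feature concrete, here is a minimal sketch of calling a locally running Ollama server using only Python’s standard library. It assumes the server is listening on its default address (`http://localhost:11434`) and that a model has already been pulled; the model tag `llama3.2` and the helper names `build_generate_request` and `generate` are illustrative choices, not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; adjust if your server differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming text generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With "stream": False the server replies with a single JSON object
    # whose "response" field holds the generated text.
    return body["response"]
```

With the server running, a call such as `generate("llama3.2", "Explain what a tokenizer does in one sentence.")` should return the model’s answer as a string. Because everything stays on `localhost`, the prompt never leaves the machine, which is the privacy benefit described above.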

3. LangChain: The LLM Application Framework

LangChain has emerged as the premier framework for building applications powered by large language models. This Python library provides a comprehensive set of tools for chaining together different AI components, managing prompts, and creating complex AI workflows. It’s become essential for developers building production-ready LLM applications.

Key Features:

- Modular components for prompt management, memory, and chains

- Integration with numerous LLM providers

- Support for vector databases and retrieval systems

- Built-in tools for agent creation and tool usage

- Extensive documentation and community examples

- Support for both synchronous and asynchronous operations

Use Cases: LangChain excels in building conversational AI applications, document analysis systems, and complex AI workflows. It’s particularly valuable for creating applications that need to combine multiple AI capabilities or integrate with external data sources.

Pros:

- Comprehensive framework covering all aspects of LLM development

- Strong community support with extensive examples

- Flexible architecture supporting various use cases

- Regular updates with new features and integrations

- Good documentation and learning resources

Why It’s Great for LLMs: LangChain abstracts the complexity of building LLM applications while providing the flexibility needed for sophisticated use cases. It’s become the standard framework for developers serious about building production-ready AI applications.
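The idea of “chaining” components can be illustrated without the library itself. The sketch below is a conceptual, standard-library-only illustration and does not use LangChain’s actual API: a prompt template, a model call, and an output parser are composed so that each step’s output feeds the next. The `fake_model` function is a hypothetical stand-in for a real LLM call.

```python
from typing import Callable

Step = Callable[[str], str]


def make_prompt_step(template: str) -> Step:
    """Return a step that fills the user's input into a prompt template."""
    return lambda user_input: template.format(input=user_input)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: echoes the prompt in upper case."""
    return f"MODEL OUTPUT: {prompt.upper()}"


def strip_prefix(text: str) -> str:
    """Output parser: drop the stand-in model's prefix."""
    return text.removeprefix("MODEL OUTPUT: ")


def chain(*steps: Step) -> Step:
    """Compose steps left to right so each output becomes the next input."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


pipeline = chain(make_prompt_step("Summarize: {input}"), fake_model, strip_prefix)
print(pipeline("LangChain composes components"))
# prints "SUMMARIZE: LANGCHAIN COMPOSES COMPONENTS"
```

A framework like LangChain adds provider integrations, memory, retrieval, and agents on top of this basic composition pattern, so swapping `fake_model` for a real provider does not require rewriting the rest of the pipeline.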

4. Weights & Biases: MLOps for Language Models

Weights & Biases (W&B) has become indispensable for teams working with large language models at scale. This platform provides comprehensive experiment tracking, model monitoring, and collaboration tools specifically designed for machine learning workflows. For LLM development, W&B offers specialized features that address the unique challenges of working with language models.

Key Features:

- Experiment tracking with automatic logging

- Model versioning and artifact management

- Real-time monitoring of training metrics

- Collaborative workspaces for team development

- Integration with popular ML frameworks

- Advanced visualization tools for model analysis

Use Cases: W&B is essential for teams conducting LLM research, fine-tuning models, or deploying language models in production. It’s particularly valuable for organizations that need to track experiments, compare model performance, and maintain reproducible ML workflows.

Pros:

- Comprehensive experiment tracking and analysis

- Excellent collaboration features for teams

- Strong integration with existing ML workflows

- Detailed visualization and reporting capabilities

- Reliable performance monitoring tools

Why It’s Great for LLMs: W&B addresses the unique challenges of working with large language models, including long training times, large model sizes, and complex evaluation metrics. It’s become essential for serious LLM development projects.

5. Axolotl: Advanced LLM Fine-Tuning Platform

Axolotl stands out as one of the leading open-source tools for LLM fine-tuning, offering researchers and developers a powerful platform for customizing language models for specific tasks. This tool has gained significant traction in the AI community for its flexibility and comprehensive feature set.

Key Features:

- Support for various fine-tuning techniques including QLoRA and LoRA

- Integration with popular model architectures

- Configurable training pipelines

- Multi-GPU and distributed training support

- Built-in evaluation and validation tools

- Extensive configuration options for advanced users

Use Cases: Axolotl is ideal for researchers and developers who need to fine-tune models for specific domains or tasks. It’s particularly valuable for creating specialized models that outperform general-purpose alternatives in specific use cases.

Pros:

- Highly configurable and flexible

- Strong support for advanced fine-tuning techniques

- Active development with regular updates

- Comprehensive documentation and examples

- Cost-effective alternative to commercial solutions

Why It’s Great for LLMs: Axolotl democratizes access to advanced fine-tuning techniques, making it possible for researchers and developers to create highly specialized language models without extensive infrastructure investments.

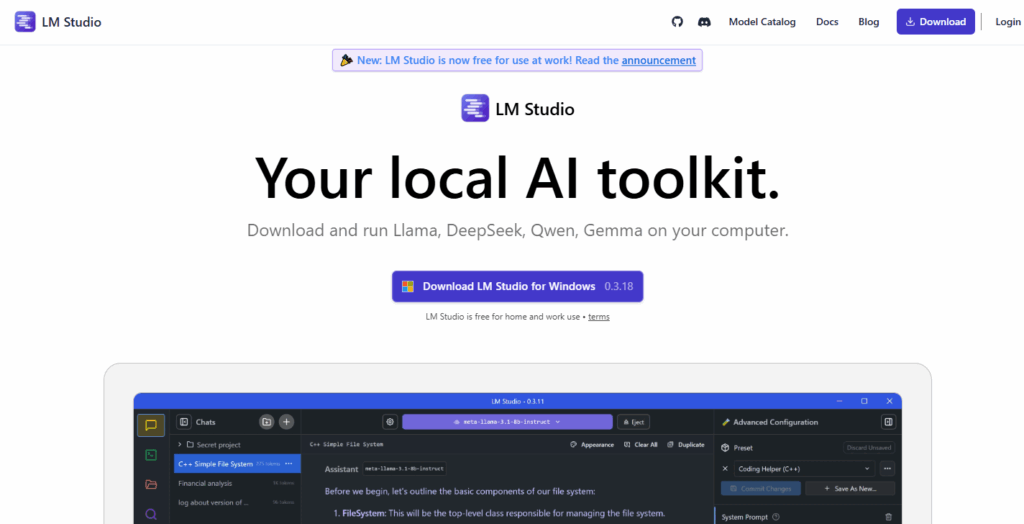

6. LM Studio: User-Friendly Local LLM Interface

LM Studio has gained popularity as a user-friendly solution for running large language models locally, especially among developers and researchers who need an intuitive interface for experimenting with different models and configurations.

Key Features:

- Intuitive graphical interface for model management

- Support for multiple model formats and architectures

- Built-in chat interface for model testing

- Performance monitoring and optimization tools

- Model comparison and evaluation features

- Easy model switching and configuration

Use Cases: LM Studio is perfect for developers who want to experiment with different models, researchers conducting comparative studies, and teams that need a user-friendly interface for local model deployment.

Pros:

- Intuitive user interface

- No command-line expertise required

- Good performance on consumer hardware

- Active development with regular updates

- Strong community support

Why It’s Great for LLMs: LM Studio makes local LLM deployment accessible to users who prefer graphical interfaces over command-line tools. It’s particularly valuable for teams that include non-technical members who need to interact with language models.

7. Unsloth: Optimized LLM Training and Inference

Unsloth has emerged as a powerful tool for optimizing LLM training and inference, focusing on performance improvements and memory efficiency. It simplifies the fine-tuning process through a straightforward interface and built-in optimizations, making it accessible for both beginners and experts in the field.

Key Features:

- Memory-efficient training algorithms

- Accelerated inference optimizations

- Support for popular model architectures

- Integration with existing training pipelines

- Automatic mixed precision training

- Dynamic batching for improved throughput

Use Cases: Unsloth is ideal for developers working with limited computational resources, teams that need to optimize inference costs, and organizations looking to maximize the efficiency of their LLM deployments.

Pros:

- Significant performance improvements

- Reduced memory requirements

- Easy integration with existing workflows

- Strong documentation and examples

- Active development with regular optimizations

Why It’s Great for LLMs: Unsloth addresses one of the biggest challenges in LLM development – computational efficiency. It makes it possible to work with larger models on smaller hardware and reduces the cost of running language models in production.
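A quick back-of-the-envelope calculation shows why memory efficiency matters so much. The sketch below estimates the memory needed just to hold a model’s weights at different numeric precisions. It is a general rule of thumb rather than a description of Unsloth’s internals, and it ignores activations, optimizer state, and the inference cache, all of which add to the real footprint. The 7-billion-parameter size is an illustrative assumption.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed to hold the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"7B parameters at {precision}: {weight_memory_gb(7e9, precision):.1f} GB")
# prints 28.0 GB, 14.0 GB, 7.0 GB, and 3.5 GB respectively
```

Going from 16-bit to 4-bit weights cuts the weight footprint by a factor of four, which is often the difference between needing a data-center GPU and fitting on a consumer card.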

Comprehensive Comparison Table

| Tool | Pricing | Ease of Use | Deployment Type | LLM Support | Best For |
| --- | --- | --- | --- | --- | --- |
| Hugging Face | Free/Paid tiers | Moderate | Cloud/Local | Extensive | Research, Prototyping |
| Ollama | Free | Easy | Local | Good | Privacy, Offline Use |
| LangChain | Free | Moderate | Cloud/Local | Excellent | Application Development |
| Weights & Biases | Free/Paid | Moderate | Cloud | Excellent | Team Collaboration |
| Axolotl | Free | Advanced | Local/Cloud | Good | Fine-tuning |
| LM Studio | Free/Paid | Easy | Local | Good | Local Experiments |
| Unsloth | Free | Moderate | Local/Cloud | Good | Performance Optimization |

Real-World Examples of Large Language Models in Action

Real-world examples help illustrate the practical applications of these tools. In the healthcare sector, language models power medical chatbots that provide preliminary diagnoses and health information. Financial institutions use LLMs for fraud detection, automated customer service, and document analysis. Educational platforms leverage these models for personalized tutoring and automated grading systems.

E-commerce companies implement language models for product recommendations, customer service automation, and content generation. The most effective implementations often combine multiple AI tools to create sophisticated systems that can handle complex user interactions and provide accurate, contextually relevant responses.

Content creation has been revolutionized by large language models that can generate articles, marketing copy, and creative content at scale. News organizations use these models for automated reporting, while marketing teams leverage them for personalized campaign content and social media management.

Key Trends in AI Language Models for 2025

Trends in AI language models in 2025 show several significant developments. There’s a growing emphasis on efficiency and specialized models rather than simply scaling up parameter counts. Small pre-trained models are gaining popularity for specific tasks where full-scale models would be overkill. The focus has shifted toward creating more efficient, task-specific models that can deliver excellent performance while using fewer computational resources.

Another significant trend is the democratization of AI model development. Open source LLM tools have made it possible for smaller organizations and individual developers to create sophisticated AI applications without massive infrastructure investments. This has led to a proliferation of specialized models and applications across various industries.

The integration of multimodal capabilities is also becoming standard, with image reasoning capabilities being incorporated into language models. This convergence of text and visual processing is opening up new possibilities for AI applications that can understand and generate both textual and visual content.

Frequently Asked Questions

1. What is the difference between LLMs and traditional language models?

Large language models differ from traditional language models primarily in scale and capability. While traditional models might have millions of parameters, LLMs contain billions or even trillions of parameters. This massive scale enables LLMs to understand context better, generate more coherent text, and perform complex reasoning tasks. Traditional models were often task-specific, while LLMs can handle multiple tasks without specific training for each one.

2. Which are the best free AI tools for large language models?

Several excellent free tools are available for working with large language models. Hugging Face offers a generous free tier with access to thousands of models. Ollama provides completely free local deployment capabilities. LangChain is open-source and free to use. For those looking on GitHub, repositories like Axolotl and various Hugging Face projects provide extensive free resources for LLM development.

3. How do I choose between cloud-based and local models?

The choice between cloud-based and local models depends on your specific requirements. Cloud-based solutions offer scalability, access to the latest models, and minimal setup requirements. They’re ideal for applications that need to handle variable loads or require cutting-edge capabilities. Local models provide better privacy control, no ongoing API costs, and independence from internet connectivity. Consider factors like data sensitivity, cost constraints, performance requirements, and technical expertise when making this decision.

4. What is the best approach for fine-tuning LLMs?

Fine-tuning LLMs requires careful consideration of your data, computational resources, and specific use case. Start with a pre-trained model that’s closest to your domain. Use techniques like QLoRA or LoRA for efficient fine-tuning if you have limited resources. Ensure your training data is high-quality and representative of your target use case. Tools like Axolotl and platforms that support LLM fine-tuning can significantly simplify this process.
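To see why LoRA is considered efficient, it helps to count trainable parameters. Instead of updating a full weight matrix of shape d_out × d_in, LoRA trains two small matrices of shapes d_out × r and r × d_in, where r is the rank. The sketch below works through that arithmetic for a single layer; the 4096 × 4096 layer size and rank 8 are illustrative assumptions, not values taken from any particular model or tool.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for LoRA's two low-rank factors:
    B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative layer size and rank (assumptions for this example).
d_out, d_in, rank = 4096, 4096, 8
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"Full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the layer")
# prints 16,777,216, then 65,536, then 0.39%
```

QLoRA applies the same idea on top of a base model whose frozen weights are stored in a quantized format, which reduces memory use further.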

5. How can I evaluate the performance of different LLMs?

Evaluating LLM performance requires both quantitative metrics and qualitative assessment. Common metrics include perplexity, BLEU scores for translation tasks, and task-specific benchmarks. However, human evaluation remains crucial for assessing factors like coherence, helpfulness, and appropriateness. Tools like Weights & Biases can help track and compare different metrics across models and experiments.
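Perplexity is the easiest of these metrics to compute by hand: it is the exponential of the average negative log-probability the model assigned to each token, so lower is better. The following sketch assumes you already have per-token natural-log probabilities from your model; the function name and the sample values are illustrative.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities.

    Computes exp(-(1/N) * sum(log p_i)) over the N tokens.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_negative_log_prob = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_negative_log_prob)


# A model that gives every token probability 0.25 is as uncertain as a
# uniform choice among four options, so its perplexity is 4.
sample = [math.log(0.25)] * 5
print(round(perplexity(sample), 6))  # prints 4.0
```

Perplexity only measures how well a model predicts text. It says nothing about helpfulness or factual accuracy, which is why human evaluation and task-specific benchmarks still matter.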

6. What are the cost considerations for implementing LLM tools?

Cost considerations for LLM tools vary significantly based on your approach. API-based solutions charge per token or request, which can scale with usage. Self-hosted solutions require upfront hardware investments but have lower ongoing costs. Consider factors like expected usage volume, model size requirements, and the need for fine-tuning when evaluating costs. Many organizations find a hybrid approach most cost-effective, using free or low-cost tools for development and more powerful solutions for production deployments.
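A rough break-even calculation can make this trade-off tangible. The sketch below compares a per-token API bill with an amortized self-hosting cost. Every number in it (the price per million tokens, the hardware cost, the amortization period, and the running cost) is a hypothetical placeholder, and it makes the simplifying assumption that self-hosting costs do not grow with usage.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """API bill for a month, given a flat price per million tokens."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def monthly_self_hosted_cost(
    hardware_cost: float, amortization_months: int, monthly_running_cost: float
) -> float:
    """Hardware spread evenly over its lifetime, plus power and upkeep."""
    return hardware_cost / amortization_months + monthly_running_cost


def break_even_tokens_per_month(
    price_per_million_tokens: float,
    hardware_cost: float,
    amortization_months: int,
    monthly_running_cost: float,
) -> float:
    """Monthly token volume at which the API bill equals the self-hosted cost."""
    fixed = monthly_self_hosted_cost(hardware_cost, amortization_months, monthly_running_cost)
    return fixed / price_per_million_tokens * 1_000_000


# Hypothetical inputs: $2 per million tokens, $6,000 of hardware
# amortized over 24 months, and $50 per month for power and upkeep.
tokens = break_even_tokens_per_month(2.0, 6000.0, 24, 50.0)
print(f"Break-even volume: {tokens:,.0f} tokens per month")
# prints "Break-even volume: 150,000,000 tokens per month"
```

Below the break-even volume the API is cheaper under these assumptions, and above it the self-hosted setup wins. Plugging in your own prices and usage estimates gives a first-pass answer before factoring in engineering time and model quality.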

Conclusion: Choosing the Right AI Tools for Large Language Model Success in 2025

The landscape of AI tools for large language models has never been more diverse or powerful. As we’ve explored in this comprehensive guide, each tool serves specific needs and use cases, from the open-source flexibility of Hugging Face to the local deployment simplicity of Ollama. The key to success lies in understanding your specific requirements and choosing tools that align with your goals, resources, and technical expertise.

The trends in 2025 point toward more efficient, specialized, and accessible AI tools that democratize access to advanced language model capabilities. Whether you’re building a simple chatbot or developing complex AI applications, the tools discussed in this guide provide the foundation for success. The best LLM implementations often combine multiple tools, leveraging the strengths of each to create comprehensive solutions.

For developers and organizations venturing into LLM development, starting with free tools like Hugging Face or Ollama provides an excellent foundation for learning and experimentation. As projects mature and requirements become more complex, integrating specialized tools for fine-tuning, monitoring, and optimization becomes crucial for maintaining competitive advantage.

The future of AI development depends on choosing the right combination of tools that can adapt to evolving needs while providing the reliability and performance required for production applications. By understanding the capabilities and limitations of each tool, developers can make informed decisions that lead to successful AI implementations and drive innovation in their respective fields.

The investment in learning these tools and understanding their capabilities will pay dividends as the AI landscape continues to evolve. Whether you’re working with Google’s LLMs, exploring new base models, or developing custom solutions, having the right tools and knowledge will be essential for success in the rapidly advancing field of artificial intelligence.